Other parts of this series:

- Building a digital monetary authority for a digital age

- Next-gen central banking | Pillar 1: Harness the power of data

- Next-gen central banking | Pillar 2: Enable and drive innovation

- Next-gen central banking | Pillar 3: Drive efficiency

- Next-gen central banking | Pillar 4: Communicate effectively

- Next-gen central banking | Part 5: Building the workforce of the future

Our first post identified five areas central banks should focus on to become the digital regulators of tomorrow. Let’s take a closer look at the first pillar.

Pillar 1: Harness the power of data

Most central banks base their decisions on traditional information flows, yet these can suffer from a range of issues: much data is not received on a timely basis; data sources can be limited; and most data is used for reporting rather than analyzed for insights, including possible early warnings.

Even in the digital age, large amounts of the data that central banks do collect are based on periodic, retrospective reporting from financial institutions (FIs) that might not reflect the most up-to-date status of the financial services (FS) sector or the economy.

This current reporting mechanism constrains central banks in carrying out their regulatory, monetary and supervisory functions in this fast-paced digital era. That’s partly why the Monetary Authority of Singapore decided in 2017 to establish its Data Analytics Group: it wanted to improve how it uses data and analytics in order to perform its responsibilities better and to make regulatory compliance more efficient for FIs.¹

Central banks encounter other difficulties too. Supervisory departments, for example, may face challenges in collecting less defined or unstructured data from FIs, determining what is relevant, deciding to what extent aspects are comparable across the FS sector and then generating a single, comprehensive picture. As a result, it can take months before enough quality data is collected and analyzed to provide meaningful insights and generate a comprehensive view.

Broadly speaking, central banks’ key data difficulties include:

- Data is collected retrospectively and for predefined intervals. Consequently, data consists of lagging indicators rather than being in real time (or near-real time).

- The quality of data is at times inconsistent, and it can take weeks of iterative cycles to validate and rectify it.

- Data is often siloed internally within a central bank’s divisions, with each having different policies, standards and requirements.

- The data-request process is lengthy. This makes it hard to provide consistent definitions, for example, as well as to model new data elements and define reporting specifications.

- Much data is provided in aggregated format and lacks sufficient granularity to perform drill-downs and develop accurate analytical modeling.

- Traditional reporting tools may not be able to assess and process vast volumes of data thoroughly.

- Data is typically drawn from traditional sources of uni-directional reporting by FIs—and there is limited use of alternative sources.

- There are also challenges around qualitative data. In our observation, a large part of the examination done by supervisors involves applying their judgment—reading thousands of pages of documents in order to assess all areas of an FI. This manual process is prone to errors and inconsistencies.

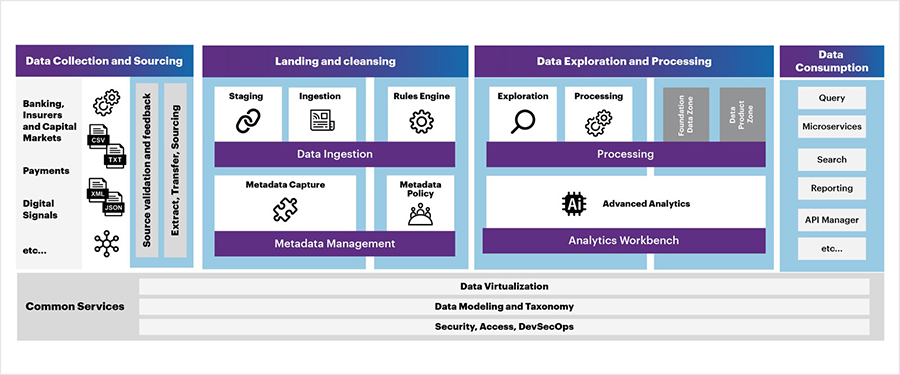

Data platform architecture

What central banks require is a platform that can harness the data needed. Such a platform would collect the data in real time (or as speedily as possible) and from a variety of sources, preferably directly, to increase granularity and reduce errors, then collate and analyze it according to the bank’s needs.

This platform should be able to collect data beyond just FIs and directly connect to institutions for both the pull and push of data demands.

In this way, the central bank can perform needs-based assessments of the state of companies, sectors and the broader financial system, while maintaining and adhering to data privacy and security guidelines, and carrying out its monetary, regulatory and supervisory roles to best effect. Such a platform would improve oversight, and could support banks and the market in areas like liquidity, credit risk, anti-money-laundering, fraud and financial risk management.

Creating such a platform requires that the central bank revisit its IT architecture and incorporate technologies like Big Data, artificial intelligence, machine learning and natural-language processing. Refreshing its architecture—which should include revising the central bank’s data-gathering and data-management processes—will position it to become a next-generation entity.

At the same time, this will drive standardization across the industry by requiring that FIs ensure connectivity with the central bank’s platform and comply with the requested standardization.

The data lifecycle and the data platform

The best way for a central bank to begin the assessment process is to understand its existing data lifecycle. That starts with determining the source of the data it uses and its type: for example, its frequency, granularity and whether it is structured or unstructured.

The next step is to see how that data is ingested into its systems. Often, different divisions of the central bank collect the data they need, yet cannot share it. This results in redundant data-collection processes and unnecessary overhead at central banks and sources. Additionally, the data needed by supervisory, monetary and regulatory departments often varies—with FIs, for example, required to file according to individual guidelines, in different formats and under varying timelines.

The final step is to assess the output stage, in which data is integrated then packaged for consumption, whether internal or external.
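As a rough illustration of the first two steps, the inventory of sources could be kept as a small catalog that records each source's frequency, granularity and structure, and flags data that several divisions collect separately. This is only a sketch: the `DataSource` fields, the `Frequency` values and the division names are assumptions made for illustration, not a prescribed schema.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from enum import Enum


class Frequency(Enum):
    """Illustrative reporting frequencies (assumed, not exhaustive)."""
    REAL_TIME = "real-time"
    DAILY = "daily"
    MONTHLY = "monthly"
    QUARTERLY = "quarterly"


@dataclass(frozen=True)
class DataSource:
    """One entry in a hypothetical inventory of the bank's data sources."""
    name: str          # what is collected, e.g. "prudential return"
    provider: str      # who supplies it, e.g. an FI or a government agency
    frequency: Frequency
    granularity: str   # e.g. "transaction-level" or "aggregated"
    structured: bool   # False for documents, free text and similar
    collected_by: str  # division of the central bank that ingests it


def summarize(sources):
    """Profile the inventory by frequency, structure and granularity."""
    return {
        "by_frequency": Counter(s.frequency.value for s in sources),
        "unstructured": sorted({s.name for s in sources if not s.structured}),
        "aggregated_only": sorted(
            {s.name for s in sources if s.granularity == "aggregated"}
        ),
    }


def redundant_collections(sources):
    """Return data that more than one division collects from the same provider."""
    divisions = defaultdict(set)
    for s in sources:
        divisions[(s.name, s.provider)].add(s.collected_by)
    return {
        key: sorted(names) for key, names in divisions.items() if len(names) > 1
    }
```

Calling `summarize` on the inventory shows how much of the data is lagging or aggregated, while `redundant_collections` points to the overlapping collection processes that a shared platform could consolidate.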

With that knowledge, the central bank can envision how to structure a data platform comprising five key areas (see diagram):

- Data collection and sourcing

- Data landing zone and cleansing

- Data exploration and processing

- Data consumption

- Common services

1. Data collection and sourcing

Central banks must be able to collect an array of static and dynamic data from a wide range of sources, and do so in a robust fashion. These sources include reports and filings from banks, insurers and other FIs; payments and transactions data; information published by the media, government departments and other central banks; market data; and data drawn from other regulatory bodies. At the same time, central banks should also aim for data standardization in the industry.

Additionally, central banks should cast the net more widely, for example by borrowing the concept of Digital Signal to Sales (DS2S), a sales and marketing tool that captures digital footprints related to market sentiment, such as consumer and business behavior. In this context, they would use similar technology to seek out indicators of default, fraud, liquidity issues at banks or solvency issues at a country level. Once captured, this dynamic data can be combined with static datasets in the platform’s data exploration and processing area, and analyzed to detect patterns, triggers and signal points.

2. Data landing zone and cleansing

Data that is collected and sourced arrives in the landing zone, where it is compartmentalized according to different data groups and usage. This is also where metadata is captured, and where data is cataloged, profiled and classified.

This area assesses data in terms of its type (whether it is payments data or markets data, for instance) and conducts basic data-hygiene checks. Next, the data passes through rules engines for validation, which could include cross-reporting-period validation. Quality and governance issues are taken care of here, including data protection, data standardization, data validation and anomaly detection. Having such a platform would help central banks overcome an additional challenge of the current approach: the collection of data from different sources and its placement in different silos, which makes cleansing a very repetitive exercise.
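To make the idea of a rules engine more concrete, the sketch below runs a basic hygiene check on a single filing and then a cross-reporting-period check against the previous filing. The `Filing` fields, the balance-sheet rule and the 25% change threshold are all assumptions chosen for illustration; a real rule set would come from the central bank's own reporting specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Filing:
    """A simplified, hypothetical periodic return from one FI."""
    institution: str
    period: str            # e.g. "2024-Q1"
    total_assets: float
    total_liabilities: float
    equity: float


def hygiene_issues(filing, tolerance=0.01):
    """Basic checks that need only the filing itself."""
    issues = []
    if filing.total_assets < 0 or filing.total_liabilities < 0:
        issues.append("negative balance")
    gap = filing.total_assets - (filing.total_liabilities + filing.equity)
    if abs(gap) > tolerance:
        issues.append("balance sheet does not balance")
    return issues


def cross_period_issues(current, previous, max_change=0.25):
    """Flag large swings against the previous reporting period."""
    issues = []
    if previous.total_assets > 0:
        change = (
            abs(current.total_assets - previous.total_assets)
            / previous.total_assets
        )
        if change > max_change:
            issues.append(
                f"total assets moved {change:.0%} since {previous.period}"
            )
    return issues


def validate(current, previous=None):
    """Run every rule and return the list of issues found (empty if clean)."""
    issues = hygiene_issues(current)
    if previous is not None:
        issues += cross_period_issues(current, previous)
    return issues
```

Because the rules live in one place, the same checks apply no matter which division requested the data, which is what removes the repetitive cleansing described above.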

3. Data exploration and processing

This is the platform’s laboratory where data is democratized, analyzed and processed, and where data scientists employ advanced analytics tools to create meaningful insights from a combination of both static and dynamic data. For example, they could couple sources such as the statistical FI returns of different sectors with both domestic and global macroeconomic indicators, as well as with data from other government or international agencies, to derive an advanced analytical model of the financial sector’s risk exposure.
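A deliberately simple sketch of that coupling: exposures reported in FI returns are totaled by sector and then weighted by a sector-level stress factor derived from macroeconomic indicators. The input shapes, the function names and the stress factors are hypothetical, and a real model would be far richer than a single multiplication.

```python
def sector_exposure(returns):
    """Total reported exposure per sector.

    `returns` is a list of (institution, sector, exposure) tuples, standing
    in for the statistical returns filed by FIs.
    """
    totals = {}
    for _institution, sector, exposure in returns:
        totals[sector] = totals.get(sector, 0.0) + exposure
    return totals


def stressed_exposure(returns, stress_by_sector):
    """Weight each sector's exposure by an assumed macro stress factor.

    `stress_by_sector` maps a sector to a factor between 0 and 1, for
    example derived from domestic and global indicators. Sectors without
    a factor are treated as unstressed (factor 0).
    """
    totals = sector_exposure(returns)
    return {
        sector: amount * stress_by_sector.get(sector, 0.0)
        for sector, amount in totals.items()
    }


def most_exposed(returns, stress_by_sector):
    """Sectors ranked from highest to lowest stressed exposure."""
    stressed = stressed_exposure(returns, stress_by_sector)
    return sorted(stressed, key=stressed.get, reverse=True)
```

The point of the sketch is the join itself: static returns and dynamic indicators only become comparable once they share a common key, here the sector, which is one reason the platform's common taxonomy matters.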

4. Data consumption

Central banks require different data for a variety of purposes. Some might use it for verification, others for regulatory and supervisory reasons in the form of reporting or dashboards. On top of that, there are possibilities of data sharing with different government entities, both locally and with their international counterparts. Regardless of the usage and its destination, this output stage is where data is integrated and packaged for internal or external consumption.

5. Common services

Underlying the platform are common services like data virtualization, data modeling and taxonomy, and aspects such as security and access control, metadata management and monitoring. These are essential to ensure the platform is consistent, adaptable to change, transparent and secure.

A data platform that has the attributes described above would not only possess the necessary robustness, it would also enable central banks to be more flexible in collecting and analyzing data to sharpen their supervision, including by leveraging real-time data sources.

Lastly, it is important to note that success in creating and operating such a platform goes beyond technology. It is critical that the right talent works on it and that there is sufficient training to ensure staff can adopt and leverage these technologies. Additionally, the platform won’t function properly unless the central bank institutes the right processes in terms of its operations, data governance, data delivery and business intelligence.

Done right, such a solution constitutes the first pillar in becoming a next-generation digital regulator and positions central banks to extrapolate real-time data on a forward-looking basis.

In our next post, we will look at the second pillar in this process: how central banks can enable and leverage innovation internally and externally.

1 "MAS Sets up Data Analytics Group," MAS, 2/14/2017